Overview

Measure, read, and visualize temperature, humidity, pressure, light, and UV levels. This bundle, together with its accompanying online project, shows you how to set up the sensors on the Arduino MKR ENV Shield, read environmental data from them, and visualize that data in an Arduino IoT Cloud dashboard.

The bundle contains the core products needed to build the Environmental Data on IoT Cloud project:

- 1 x Arduino MKR WiFi 1010 board, which handles the calculations and communications with its SAMD21 core and its u-blox NINA-W10 module for Wi-Fi connectivity.

- 1 x Arduino MKR Environmental Shield rev2, which provides environmental sensors to measure temperature, humidity, pressure, light, and UV levels.

Arduino IoT Cloud Compatible

Resources for Safety and Products

Manufacturer Information

The manufacturer information includes the address and related details of the product manufacturer.

Arduino S.r.l.

Via Andrea Appiani, 25

Monza, MB, IT, 20900

https://www.arduino.cc/

Responsible Person in the EU

An EU-based economic operator who ensures the product's compliance with the required regulations.

Arduino S.r.l.

Via Andrea Appiani, 25

Monza, MB, IT, 20900

Phone: +39 0113157477

Email: support@arduino.cc

Get Inspired

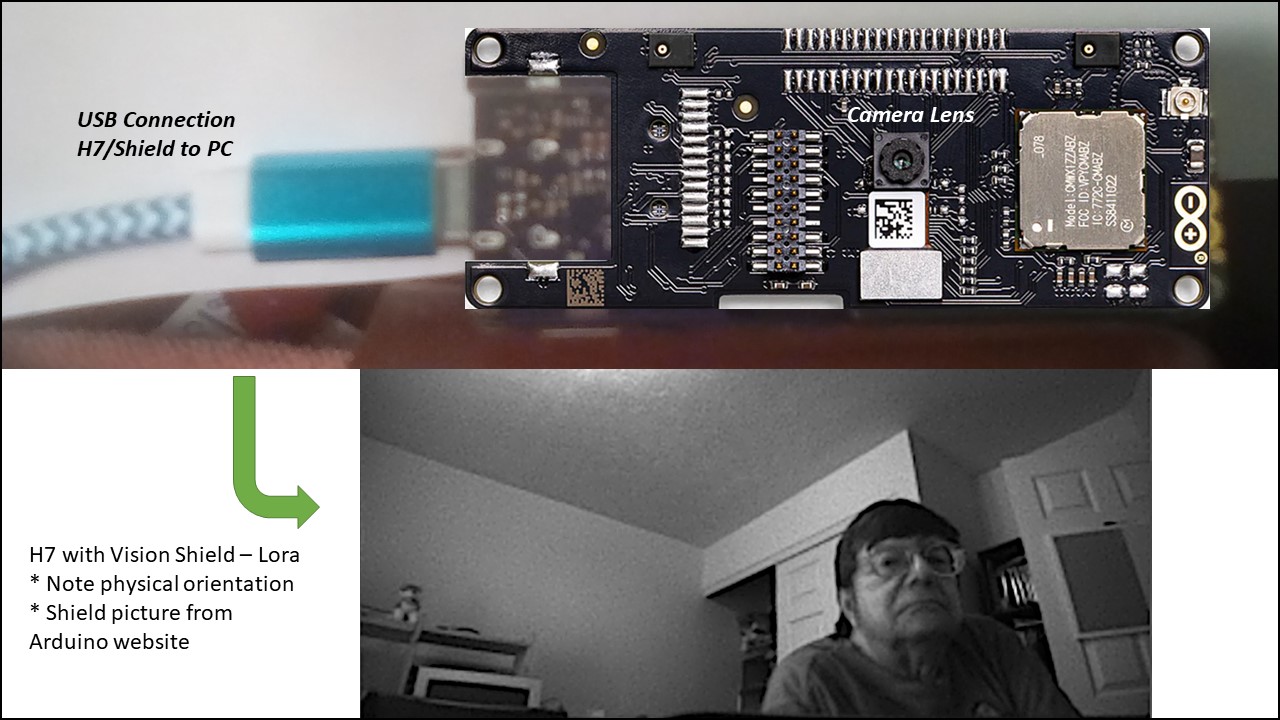

A first step in combining the Portenta H7 with the Vision Shield – LoRa: acquiring, sending, and displaying a camera image over a USB/serial connection.

Jeremy Ellis is a teacher who wanted a longer-term project through which his students could learn more about microcontrollers, computer vision, and machine learning, and what better way is there than a self-driving car? His idea was to take an off-the-shelf RC car that uses DC motors, add an Arduino Portenta H7 as the MCU, and train a model to recognize target objects for the car to follow. After selecting the “RC Pro Shredder” as the platform, Ellis added a VNH5019 Motor Driver Carrier, a servo motor for steering, and a Portenta H7 + Vision Shield along with a 1.5” OLED module. After a small custom frame was 3D printed to hold the components in the correct orientation, nearly 300 images of double-ringed markers on the floor were collected. These samples were then uploaded to Edge Impulse and labeled with bounding boxes before a FOMO-based object detection model was trained. Rather than writing a sketch from scratch, Ellis used one the Portenta community had already developed, which grabs new images, performs inferencing, and then steers the car’s servo accordingly while optionally displaying the processed image on the OLED screen. With some minor testing and adjustments, Ellis and his class built a total of four autonomous cars that could drive on their own by following a series of markers on the ground. For more details on the project, check out Ellis' Edge Impulse tutorial.