Overview

Nicla Vision lets you build your next smart project. Ever wanted an automated house, or a smart garden? With Arduino IoT Cloud compatible boards, it’s now easy: you can connect devices, visualize data, and control and share your projects from anywhere in the world. Whether you’re a beginner or a pro, we have a wide range of plans to make sure you get the features you need.

Nicla Vision combines a powerful STM32H747AII6 dual Arm® Cortex® M7/M4 processor with a 2MP color camera that supports TinyML, a smart 6-axis motion sensor, an integrated microphone and a distance sensor.

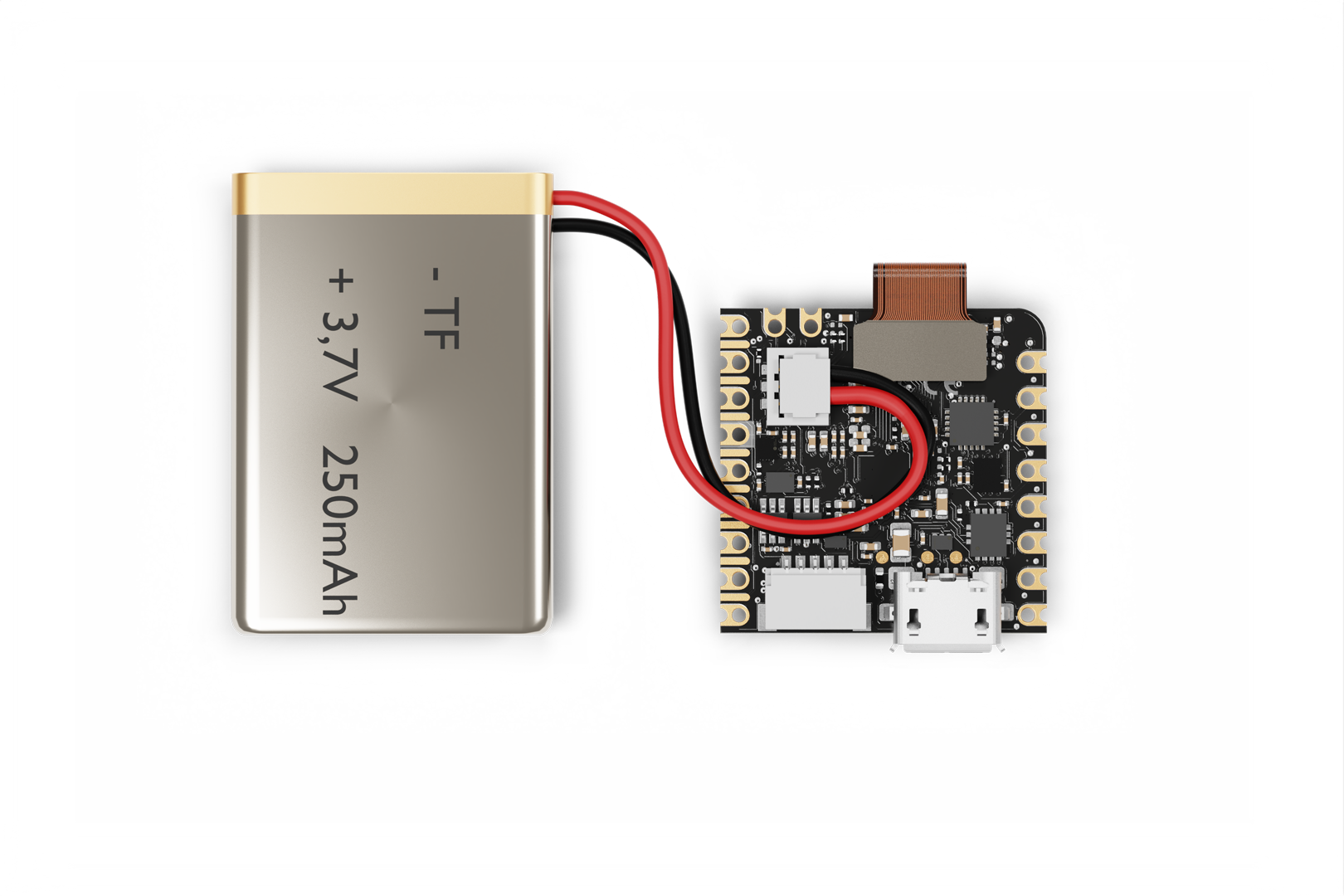

You can easily include it in any project: it is designed to be compatible with all Arduino Portenta and MKR products, fully integrates with OpenMV, supports MicroPython, and offers both Wi-Fi and Bluetooth® Low Energy connectivity. With its compact 22.86 x 22.86 mm form factor it can physically fit into most scenarios, and it requires so little energy that it can run on a battery for standalone applications.

All of this makes Nicla Vision the ideal solution for developing and prototyping on-device image processing and machine vision at the edge, for asset tracking, object recognition, predictive maintenance and more, easier and faster than ever. Train it to spot details, so you can focus on the big picture.

Key benefits include:

- Tiny form factor of 22.86 x 22.86 mm

- Powerful processor to host intelligence on the edge

- Packed with a 2MP color camera that supports TinyML, smart 6-axis motion sensor, microphone and distance sensor

- Wi-Fi and Bluetooth® Low Energy connectivity

- Supports MicroPython

- Standalone when battery powered

- Expand existing projects with sensing capabilities and make machine vision (MV) prototyping faster

Automate anything

Check that every product is labeled before it leaves the production line; unlock doors only for authorized personnel, and only if they are wearing PPE correctly; use AI to train Nicla Vision to regularly check analog meters and beam the readings to the Cloud; teach it to recognize thirsty crops and turn on the irrigation when needed.

Anytime you need to act or make a decision depending on what you see, let Nicla Vision watch, decide and act for you.

Feel seen

Interact with kiosks with simple gestures, create immersive experiences, work with cobots at your side. Nicla Vision allows computers and smart devices to see you, recognize you, understand your movements and make your life easier, safer, more efficient, better.

Keep an eye out

Let Nicla Vision be your eyes: detecting animals on the other side of the farm, letting you answer your doorbell from the beach, constantly checking on the vibrations or wear of your industrial machinery.

It’s your always-on, always precise lookout, anywhere you need it to be.

Arduino IoT Cloud Compatible

Use your Nicla Vision with Arduino's IoT Cloud, a simple and fast way to ensure secure communication for all of your connected Things.


Need Help?

Check the Arduino Forum for questions about the Arduino Language or how to make your own projects with Arduino. If you need help with your board, please get in touch with the official Arduino User Support as explained on our Contact Us page.

Warranty

You can find your board warranty information here.

Tech specs

| Feature | Specification |
|---|---|
| Microcontroller | STM32H747AII6 Dual Arm® Cortex® M7/M4 IC |
| Sensors | 2MP color camera, 6-axis motion sensor, microphone, distance sensor |
| I/O | Castellated pins |
| Power | |
| Dimensions | 22.86 mm x 22.86 mm |
| Memory | 2 MB Flash / 1 MB RAM; 16 MB QSPI Flash for storage |
| Security | NXP SE050C2 Crypto chip |
| Connectivity | Wi-Fi / Bluetooth® Low Energy 4.2 (Murata 1DX - LBEE5KL1DX-883) |
| Interface | USB interface with debug functionality |
| Operating temperature | -20 °C to +70 °C (-4 °F to 158 °F) |

Conformities

Resources for Safety and Products

Manufacturer Information

The manufacturer information includes the address and contact details of the product manufacturer.

Arduino S.r.l.

Via Andrea Appiani, 25

Monza, MB, IT, 20900

https://www.arduino.cc/

Responsible Person in the EU

An EU-based economic operator who ensures the product's compliance with the required regulations.

Arduino S.r.l.

Via Andrea Appiani, 25

Monza, MB, IT, 20900

Phone: +39 0113157477

Email: support@arduino.cc

Documentation

- Schematics (PDF)
- Pinout (PDF)
- Datasheet (PDF)

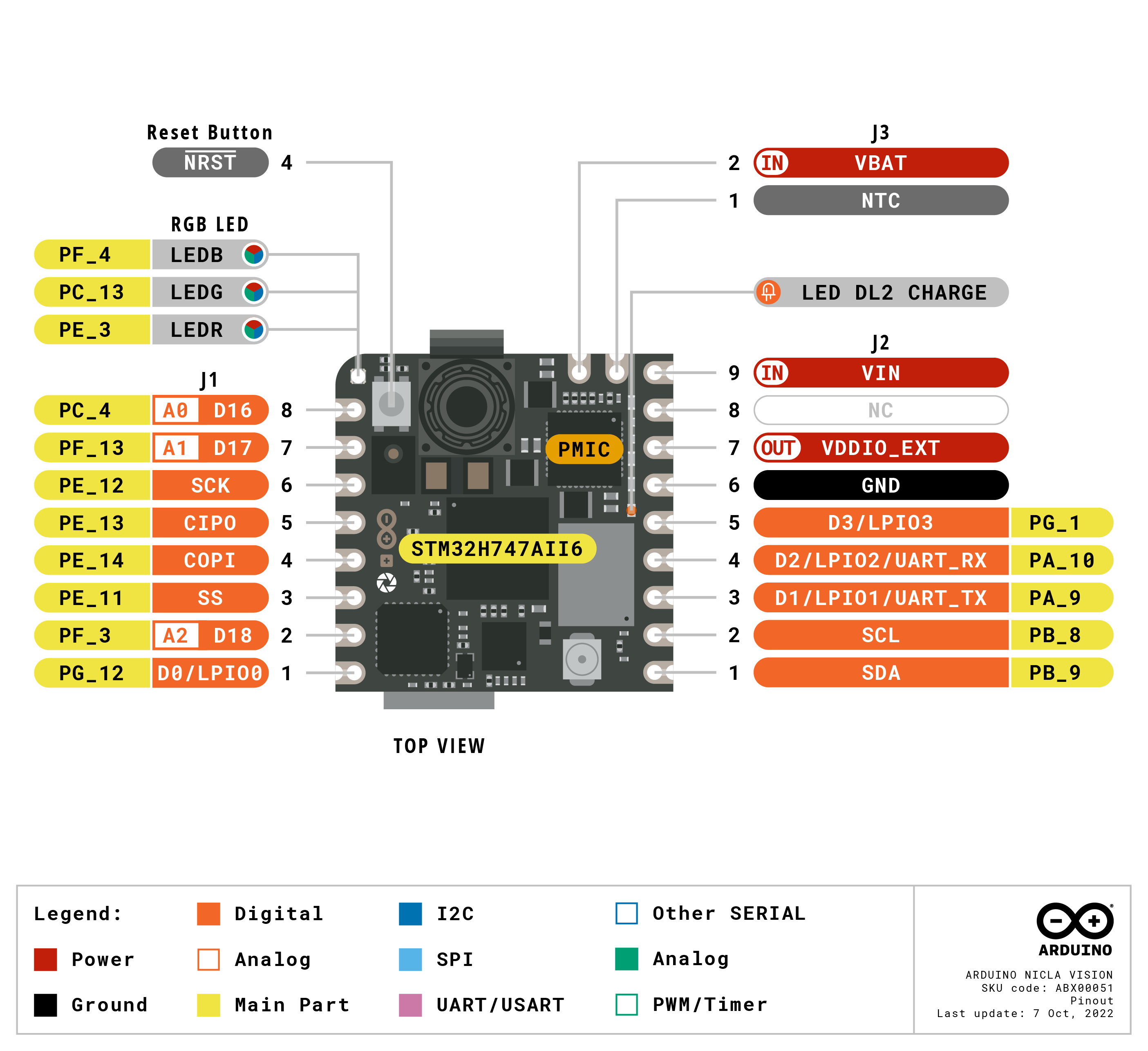

Pinout Diagram

Download the full pinout diagram as PDF here.